Per-user Rate Limiting

The following policy is based on the Rate Limiting blueprint.

Overview

Rate limiting is a critical strategy for managing the load on an API. By imposing restrictions on the number of requests a unique consumer can make within a specific time frame, rate limiting prevents a small set of users from monopolizing the majority of resources on a service, ensuring fair access for all API consumers.

Aperture implements this strategy through its high-performance, distributed rate limiter. This system enforces per-key limits based on fine-grained labels, thereby offering precise control over API usage. For each unique key, Aperture maintains a token bucket of a specified bucket capacity and fill rate. The fill rate dictates the sustained requests per second (RPS) permitted for a key, while transient overages over the fill rate are accommodated for brief periods, as determined by the bucket capacity.

The diagram shows how the Aperture SDK interacts with a global token bucket to determine whether to allow or reject a request. Each call decrements tokens from the bucket. If the bucket runs out of tokens, indicating that the rate limit has been reached, the incoming request is rejected. Conversely, if tokens are available in the bucket, the request is accepted. The token bucket is continually replenished at a predefined fill rate, up to the maximum number of tokens specified by the bucket capacity.
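The token bucket behavior described above can be sketched in a few lines of TypeScript. This is a minimal, single-process illustration and not Aperture's distributed implementation; the class name TokenBucket, its constructor parameters, and the explicit clock argument are assumptions made for the example.

```typescript
// Minimal in-memory token bucket (illustrative only, not Aperture's implementation).
class TokenBucket {
  private readonly capacity: number; // maximum tokens the bucket can hold
  private readonly fillPerSecond: number; // tokens added per second
  private tokens: number;
  private lastRefillMs: number;

  constructor(capacity: number, fillPerSecond: number, nowMs: number = Date.now()) {
    this.capacity = capacity;
    this.fillPerSecond = fillPerSecond;
    this.tokens = capacity; // start full so an initial burst is allowed
    this.lastRefillMs = nowMs;
  }

  // Refills based on elapsed time, then consumes one token if available.
  tryAcquire(nowMs: number = Date.now()): boolean {
    const elapsedSec = Math.max(0, nowMs - this.lastRefillMs) / 1000;
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSec * this.fillPerSecond);
    this.lastRefillMs = nowMs;
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true; // request accepted
    }
    return false; // bucket empty: request rejected
  }
}

// Capacity 3, refilling 1 token per second, driven by an explicit clock in ms.
const bucket = new TokenBucket(3, 1, 0);
const burst = [0, 0, 0, 0].map((t) => bucket.tryAcquire(t)); // four calls at t = 0
const afterWait = bucket.tryAcquire(1000); // one token has refilled by t = 1 s
console.log(burst, afterWait);
```

In this sketch the first three calls drain the bucket, the fourth is rejected, and a call one second later succeeds because one token has been refilled.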

Before exploring Aperture's rate limiting capabilities, make sure that you have signed up to Aperture Cloud and set up an organization. For more information on how to sign up, follow our step-by-step guide.

Rate Limiting with Aperture SDK

The first step to using the Aperture SDK is to import and set up Aperture Client:

- TypeScript

import { ApertureClient } from "@fluxninja/aperture-js";

// Create aperture client
export const apertureClient = new ApertureClient({
  address: "ORGANIZATION.app.fluxninja.com:443",
  apiKey: "API_KEY",
});

You can obtain your organization address and API Key within the Aperture Cloud UI by clicking the Aperture tab in the sidebar menu.

The next step is making a startFlow call to Aperture. For this call, it is important to specify the control point (rate-limiting-feature in our example) and the labels that will align with the rate limiting policy, which we will create in Aperture Cloud in one of the next steps.

- TypeScript

const flow = await apertureClient.startFlow("rate-limiting-feature", {
  labels: {
    user_id: "some_user_id",
  },
  grpcCallOptions: {
    deadline: Date.now() + 1000, // ms
  },
});

Next, assess whether the request is permissible by checking the decision returned by the shouldRun call. Developers can use this decision to either rate limit abusive users or allow requests that are within the limit. While our current example only logs the request, in real-world applications you can execute relevant business logic when a request is allowed.

It is important to make the end call after processing each request, in order to send telemetry data that provides granular visibility for each flow.

- TypeScript

if (flow.shouldRun()) {
  console.log("Request accepted. Processing...");
} else {
  console.log("Request rate-limited. Try again later.");
}

flow.end();
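One way to make sure the end call is never skipped is to wrap the decision and the business logic in a try/finally block. The sketch below is an assumption-laden illustration: FlowLike is a local stand-in that declares only the shouldRun and end methods used above, while handleWithFlow, HandlerResult, stubFlow, and the 429 status code are hypothetical names and choices introduced for the example. It is synchronous for brevity; an asynchronous variant would await the work inside the try block.

```typescript
// Local stand-in for the flow object; only the two methods used above are assumed.
interface FlowLike {
  shouldRun(): boolean;
  end(): void;
}

interface HandlerResult {
  status: number;
  body: string;
}

// Hypothetical helper: runs `work` only when the flow is accepted and always ends the flow.
function handleWithFlow(flow: FlowLike, work: () => string): HandlerResult {
  try {
    if (!flow.shouldRun()) {
      return { status: 429, body: "Request rate-limited. Try again later." };
    }
    return { status: 200, body: work() };
  } finally {
    flow.end(); // runs on accept, reject, and thrown errors alike
  }
}

// Stub flows for demonstration; a real flow would come from startFlow.
let endCalls = 0;
const stubFlow = (allowed: boolean): FlowLike => ({
  shouldRun: () => allowed,
  end: () => {
    endCalls += 1;
  },
});

const acceptedResult = handleWithFlow(stubFlow(true), () => "Request accepted. Processing...");
const rejectedResult = handleWithFlow(stubFlow(false), () => "never runs");
console.log(acceptedResult.status, rejectedResult.status, endCalls);
```

Because the end call sits in the finally block, telemetry is still sent when the business logic throws.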

Create a Rate Limiting Policy

- Aperture Cloud UI

- aperturectl

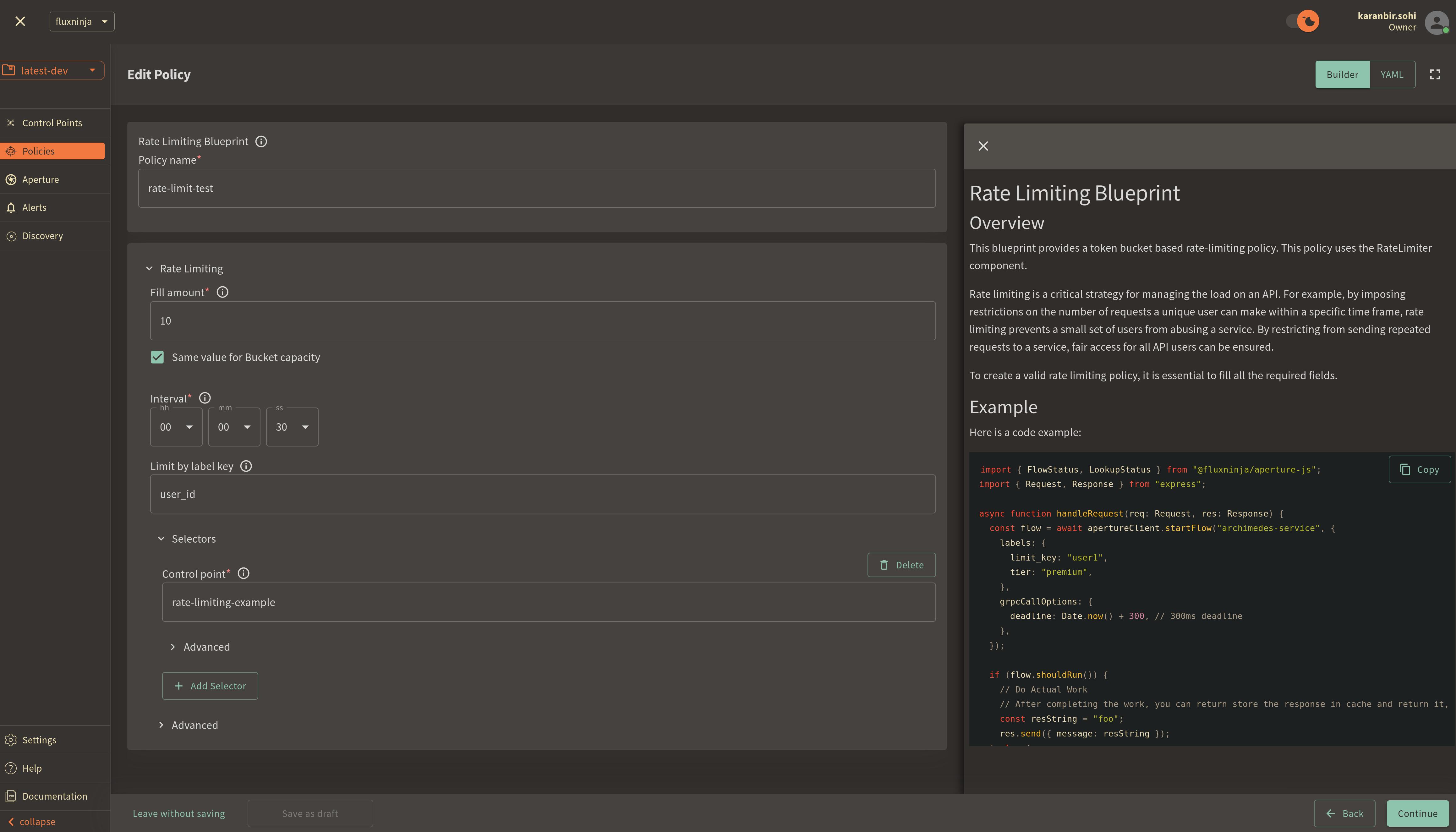

Navigate to the Policies tab on the sidebar menu, and select Create Policy in the upper-right corner. Next, choose the Rate Limiting blueprint and complete the form with these specific values:

- Policy name: Unique for each policy, this field can be used to define policies tailored for different use cases. Set the policy name to rate-limit-test.
- Fill amount: Configures the number of tokens added to the bucket within the selected interval. Set Fill amount to 10.
- Bucket Capacity: Defines the maximum capacity of the bucket in the rate limiter. Check the "same value for bucket capacity" option to set the same value for bucket capacity and fill amount.
- Interval: Specifies the amount of time over which Fill amount tokens are added to the bucket. Set Interval to 30 seconds.
- Limit By Label Key: Determines the specific label key used for enforcing rate limits. We'll use user_id as an example.
- Control point: It can be a particular feature or execution block within a service. We'll use rate-limiting-feature as an example.

Once you've entered these six fields, click Continue and then Apply Policy to finalize the policy setup.

For this policy, a unique token bucket is created for each user identified by their user_id. Users are permitted to make up to 10 requests in a burst before encountering rate limiting. Because the bucket refills gradually, at 10 tokens per 30 seconds, users who have exhausted their burst can continue at a sustained rate of roughly one request every 3 seconds.
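To make these numbers concrete, the following sketch simulates the policy values used here (bucket capacity 10, fill amount 10, interval 30 seconds) with one bucket per user_id. It is a local approximation that assumes tokens are added continuously; the allow function, the buckets map, and the user names are hypothetical and not part of Aperture.

```typescript
// Policy values used in this guide.
const BUCKET_CAPACITY = 10;
const FILL_AMOUNT = 10;
const INTERVAL_SECONDS = 30;

// Sustained rate, assuming tokens are added continuously over the interval.
const tokensPerSecond = FILL_AMOUNT / INTERVAL_SECONDS; // about 0.33 requests per second
const secondsPerToken = INTERVAL_SECONDS / FILL_AMOUNT; // 3 seconds per request

type BucketState = { tokens: number; lastMs: number };
const buckets = new Map<string, BucketState>(); // one bucket per user_id

// Hypothetical local check: refill the user's bucket, then try to take one token.
function allow(userId: string, nowMs: number): boolean {
  const state = buckets.get(userId) ?? { tokens: BUCKET_CAPACITY, lastMs: nowMs };
  const elapsedSec = (nowMs - state.lastMs) / 1000;
  state.tokens = Math.min(BUCKET_CAPACITY, state.tokens + elapsedSec * tokensPerSecond);
  state.lastMs = nowMs;
  const ok = state.tokens >= 1;
  if (ok) {
    state.tokens -= 1;
  }
  buckets.set(userId, state);
  return ok;
}

// "alice" sends 12 requests at t = 0: the burst of 10 passes, the rest are rejected.
let aliceAccepted = 0;
for (let i = 0; i < 12; i++) {
  if (allow("alice", 0)) {
    aliceAccepted++;
  }
}

const bobFirst = allow("bob", 0); // a different user_id has its own full bucket
const aliceAt2s = allow("alice", 2000); // only about 0.67 tokens refilled so far
const aliceAt4s = allow("alice", 4000); // about 1.33 tokens available by now
console.log({ aliceAccepted, bobFirst, aliceAt2s, aliceAt4s, secondsPerToken });
```

In this simulation one user exhausting their bucket has no effect on another user, which is the per-key behavior selected by the Limit By Label Key setting.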

If you haven't installed aperturectl yet, begin by following the Set up CLI aperturectl guide. Once aperturectl is installed, generate the values file necessary for creating the rate limiting policy using the command below:

aperturectl blueprints values --name=rate-limiting/base --output-file=rate-limit-test.yaml

Following are the fields that need to be filled for creating a rate limiting policy:

- policy_name: Unique for each policy, this field can be used to define policies tailored for different use cases. Set policy_name to rate-limit-test.
- fill_amount: Configures the number of tokens added to the bucket within the selected interval. Set fill_amount to 10.
- bucket_capacity: Defines the maximum capacity of the bucket in the rate limiter. Set bucket_capacity to 10.
- interval: Specifies the amount of time over which fill_amount tokens are added to the bucket. Set interval to 30s (30 seconds).
- limit_by_label_key: Determines the specific label key used for enforcing rate limits. We'll use user_id as an example.
- control_point: It can be a particular feature or execution block within a service. We'll use rate-limiting-feature as an example.

Here is how the complete values file would look:

# yaml-language-server: $schema=../../../../../blueprints/rate-limiting/base/gen/definitions.json
blueprint: rate-limiting/base
uri: ../../../../../blueprints
policy:
  policy_name: "rate-limit-test"
  rate_limiter:
    bucket_capacity: 10
    fill_amount: 10
    parameters:
      interval: 30s
      limit_by_label_key: "user_id"
    selectors:
      - control_point: "rate-limiting-feature"

The last step is to apply the policy using the following command:

aperturectl cloud blueprints apply --values-file=rate-limit-test.yaml


Next, we'll proceed to run an example to observe the newly implemented policy in action.

Rate Limiting in Action

Begin by cloning the Aperture JS SDK.

Switch to the example directory and follow these steps to run the example:

- Install the necessary packages:
  - Run npm install to install the base dependencies.
  - Run npm install @fluxninja/aperture-js to install the Aperture SDK.
- Run npx tsc to compile the TypeScript example.
- Run node dist/rate_limit_example.js to start the compiled example.

Once the example is running, it will prompt you for your Organization address and API Key. In the Aperture Cloud UI, select the Aperture tab from the sidebar menu. Copy and enter both your Organization address and API Key to establish a connection between the SDK and Aperture Cloud.

Monitoring Rate Limiting Policy

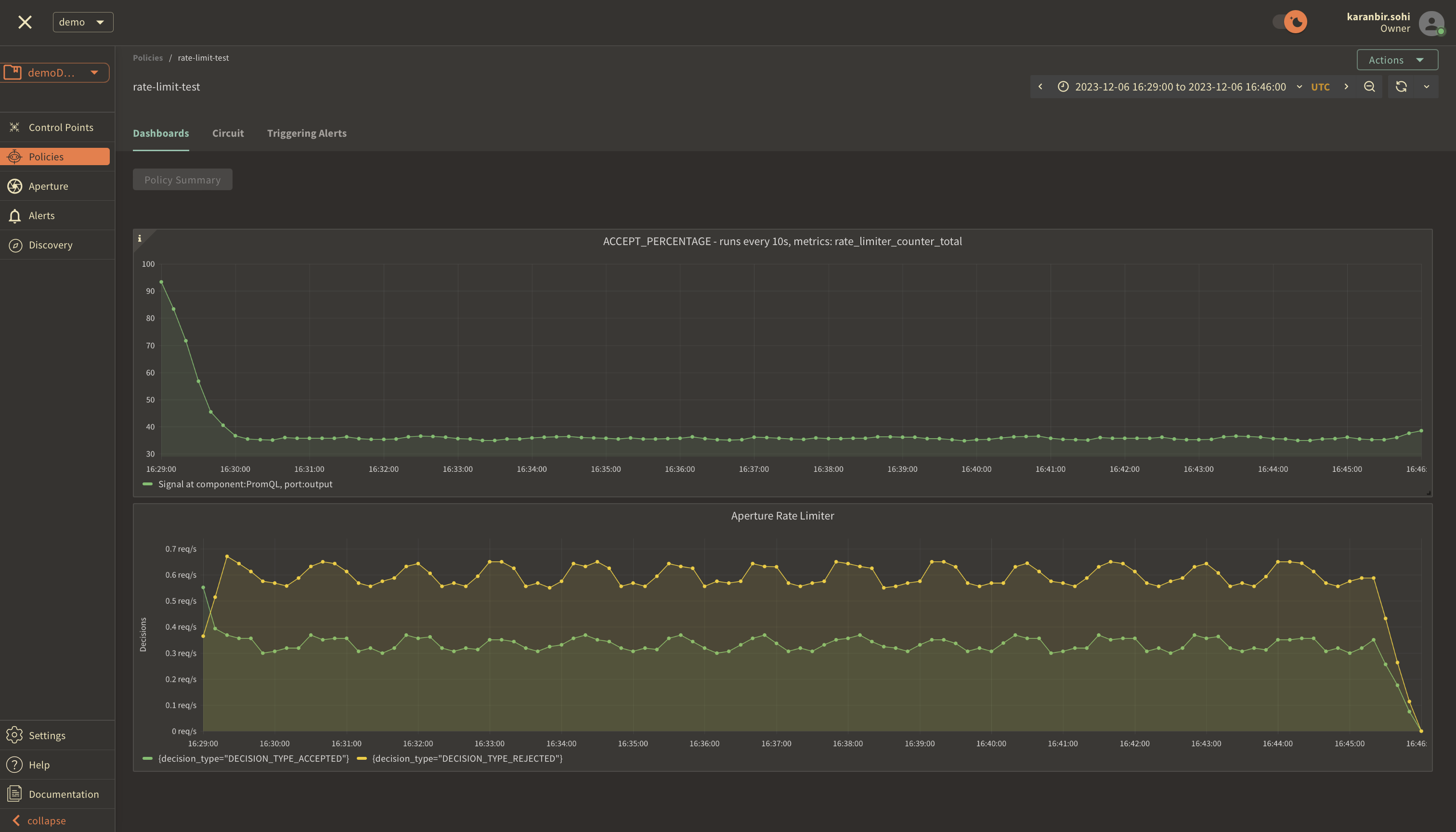

After running the example for a few minutes, you can review the telemetry data in the Aperture Cloud UI. Navigate to the Aperture Cloud UI, and click the Policies tab located in the sidebar menu. Then, select the rate-limit-test policy that you previously created.

Once you've clicked on the policy, you will see the following dashboard:

These two panels provide insights into how the policy is performing by monitoring the number of accepted and rejected requests along with the acceptance percentage. Observing these graphs will help you understand the effectiveness of your rate limiting setup and help in making any necessary adjustments or optimizations.
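As a sanity check on what the acceptance percentage panel should roughly show, the expected value can be estimated from the policy values. The sketch below is a rough upper bound under stated assumptions: a single user, a steady request rate, and a hypothetical load of 1 request per second for 5 minutes (the actual rate of the example program is not specified here). The function names are illustrative.

```typescript
// Policy values from this guide.
const BUCKET_CAPACITY = 10;
const FILL_AMOUNT = 10;
const INTERVAL_SECONDS = 30;

// Upper bound on accepted requests for one user over `durationSeconds`:
// the initial full bucket plus everything refilled during the run.
function maxAccepted(durationSeconds: number): number {
  return BUCKET_CAPACITY + (FILL_AMOUNT * durationSeconds) / INTERVAL_SECONDS;
}

// Rough acceptance percentage for a steady load from a single user.
function expectedAcceptancePercent(requestsPerSecond: number, durationSeconds: number): number {
  const total = requestsPerSecond * durationSeconds;
  if (total === 0) {
    return 100; // no requests sent, so nothing was rejected
  }
  return (Math.min(total, maxAccepted(durationSeconds)) / total) * 100;
}

// Hypothetical load: 1 request per second from one user for 5 minutes.
const percent = expectedAcceptancePercent(1, 300);
console.log(`${percent.toFixed(1)}% accepted`); // prints "36.7% accepted"
```

If the dashboard shows a markedly different percentage for a comparable load, that is a hint to recheck the fill amount, interval, or label key configured in the policy.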