Introduction

Aperture is a distributed load management platform designed for rate limiting, caching, and prioritizing requests in cloud applications. Built upon a foundation of distributed counters, observability, and a global control plane, it provides a comprehensive suite of load management capabilities. These capabilities enhance the reliability and performance of cloud applications, while also optimizing cost and resource utilization.

Integrating Aperture into your application through SDKs takes three steps:

- Define labels: Define labels to identify users, entities, or features within your application. For example, a label can name an individual user, a feature, or an API endpoint.


- Wrap your workload: Wrap your workload with `startFlow` and `endFlow` calls to establish control points around specific features or code sections inside your application. For example, you can wrap your API endpoints with Aperture SDKs to limit the number of requests per user or feature.


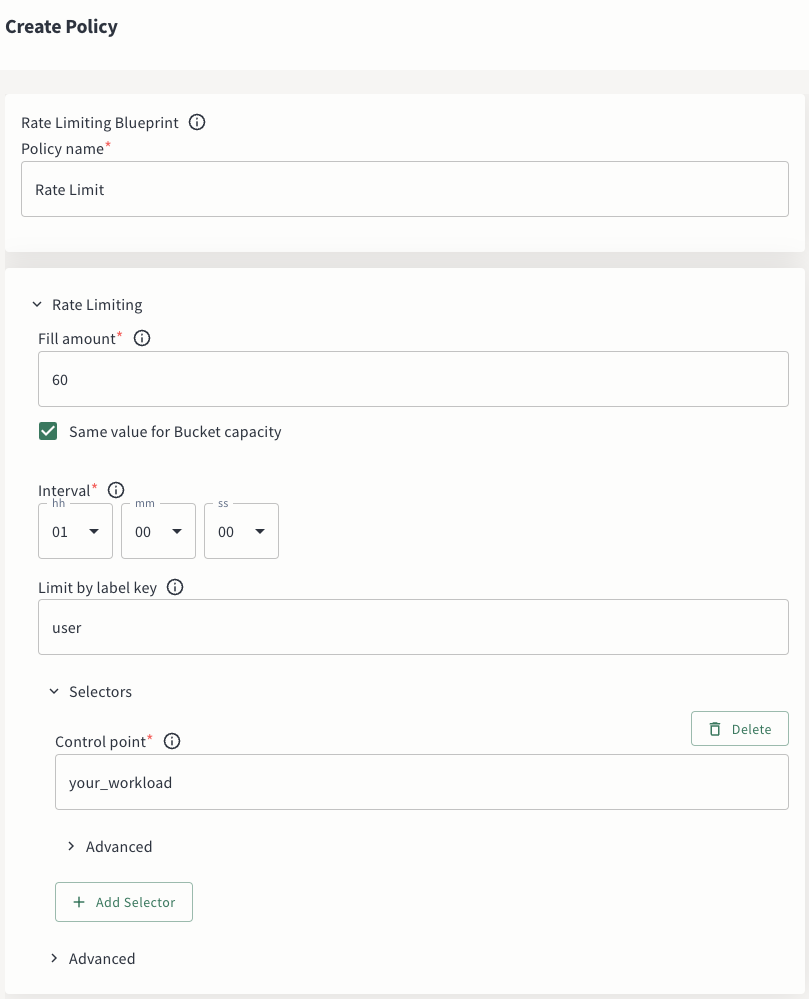

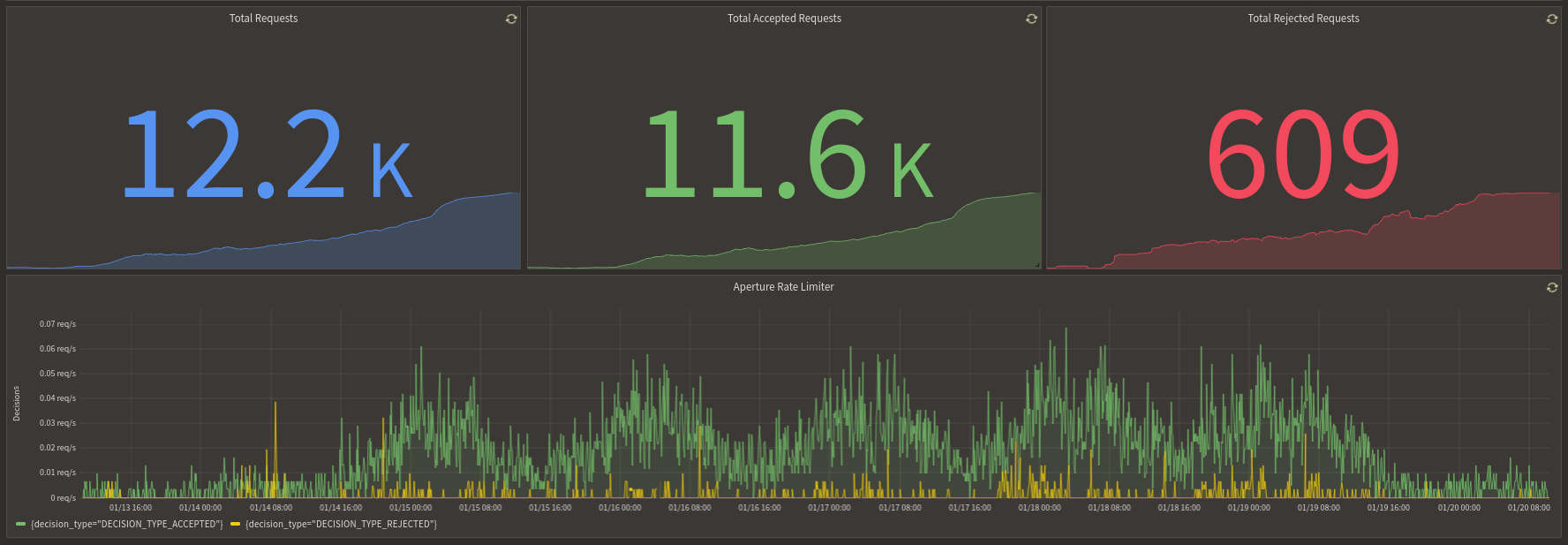

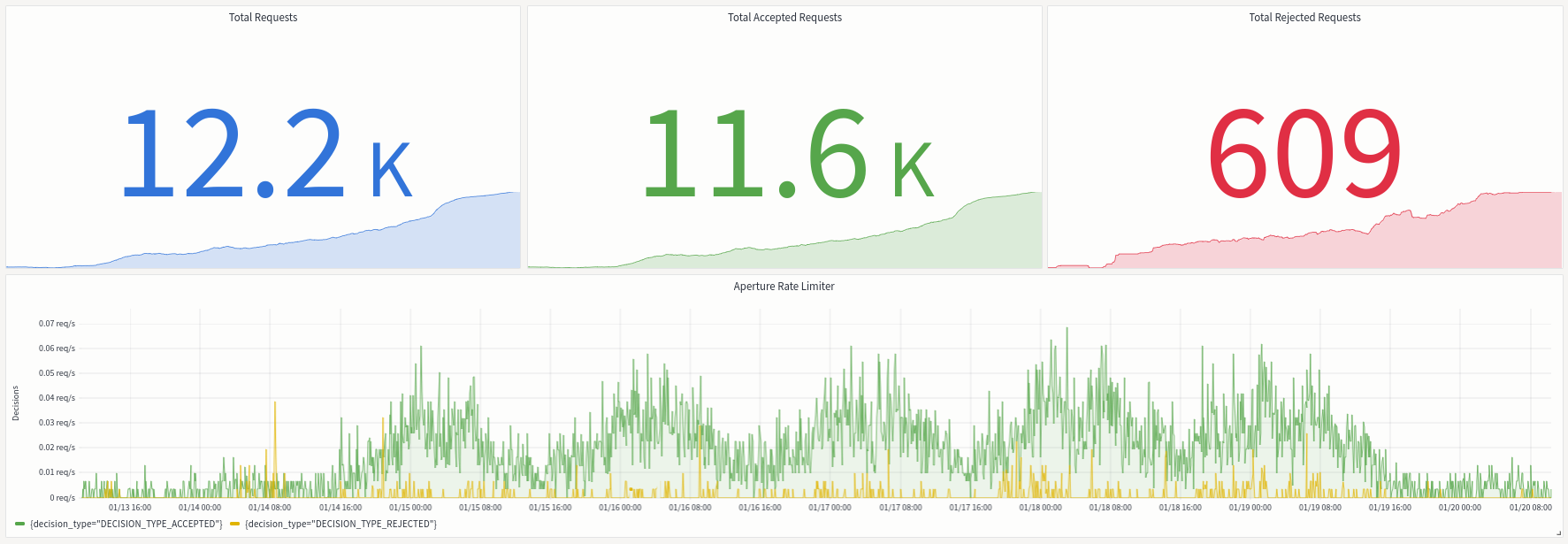

- Configure & monitor policies: Configure policies to control the rate, concurrency, and priority of requests.
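The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the Aperture SDK: `ToyController`, `Flow`, `start_flow`, `end_flow`, the `checkout-api` control point, and the `max_requests_per_user` setting are all hypothetical stand-ins for the real client, its `startFlow` and `endFlow` calls, and a policy. The "policy" here is reduced to a single per-user request cap held in process memory.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Flow:
    """Decision returned by start_flow, plus the labels it was made for."""
    labels: Dict[str, str]
    accepted: bool


@dataclass
class ToyController:
    """Hypothetical in-process stand-in for an SDK client and its policy."""
    max_requests_per_user: int  # step 3: the "policy", reduced to one number
    counts: Dict[str, int] = field(default_factory=dict)

    def start_flow(self, control_point: str, labels: Dict[str, str]) -> Flow:
        # Count requests per (control point, user) and reject once over the cap.
        key = f"{control_point}:{labels['user_id']}"
        used = self.counts.get(key, 0)
        accepted = used < self.max_requests_per_user
        if accepted:
            self.counts[key] = used + 1
        return Flow(labels=labels, accepted=accepted)

    def end_flow(self, flow: Flow) -> None:
        # A real client would report the outcome here; the toy has nothing to do.
        pass


def handle_request(controller: ToyController, user_id: str, tier: str) -> str:
    labels = {"user_id": user_id, "tier": tier}            # step 1: define labels
    flow = controller.start_flow("checkout-api", labels)   # step 2: wrap the workload
    try:
        if not flow.accepted:
            return "429 Too Many Requests"
        return "200 OK"  # the protected workload would run here
    finally:
        controller.end_flow(flow)  # always close the flow, even on errors


controller = ToyController(max_requests_per_user=2)
responses = [handle_request(controller, "alice", "free") for _ in range(3)]
bob_response = handle_request(controller, "bob", "paid")
```

With a cap of two, the third request from `alice` is rejected while `bob` is unaffected, because the decision is keyed by the `user_id` label. Placing `end_flow` in a `finally` block ensures the control point is closed on every code path.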


In addition to language SDKs, Aperture also integrates with existing control points such as API gateways, service meshes, and application middlewares.

Aperture is available as a managed service, Aperture Cloud, or can be self-hosted within your infrastructure. Visit the Architecture page for more details.

To sign up for Aperture Cloud, click here.

⚙️ Load management capabilities

- ⏱️ Global Rate-Limiting: Safeguard APIs and features against excessive usage with Aperture's high-performance, distributed rate limiter. Identify individual users or entities by fine-grained labels. Create precise rate limiters whose burst capacity and fill rate are tailored to business-specific labels. Refer to the Rate Limiting guide for more details.

- 📊 API Quota Management: Maintain compliance with external API quotas with a global token bucket and smart request queuing. This feature regulates requests aimed at external services, ensuring that the usage remains within prescribed rate limits and avoids penalties or additional costs. Refer to the API Quota Management guide for more details.

- 🚦 Concurrency Control and Prioritization: Safeguard against abrupt service overloads by limiting the number of concurrent in-flight requests. Any requests beyond this limit are queued and let in based on their priority as capacity becomes available. Refer to the Concurrency Control and Prioritization guide for more details.

- 🎯 Workload Prioritization: Safeguard crucial user experience pathways and ensure prioritized access to external APIs by strategically prioritizing workloads. With weighted fair queuing, Aperture aligns resource distribution with business value and urgency of requests. Workload prioritization applies to API Quota Management and Adaptive Queuing use cases.

- 💾 Caching: Boost application performance and reduce costs by caching costly operations, preventing duplicate requests to pay-per-use services, and easing the load on constrained services. Refer to the Caching guide for more details.
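To make the burst capacity and fill rate mentioned under Global Rate-Limiting concrete, here is a minimal single-process token bucket keyed by a label value. It is a sketch under stated assumptions, not Aperture's implementation: Aperture's limiter is distributed, whereas `TokenBucket` and `LabelRateLimiter` are hypothetical names that keep state in local memory, and the caller passes the clock reading explicitly so the behavior is deterministic.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class TokenBucket:
    burst_capacity: float  # maximum tokens the bucket can hold
    fill_rate: float       # tokens added per second
    tokens: float          # tokens currently available
    last_refill: float     # clock reading at the last refill, in seconds

    def try_acquire(self, now: float, cost: float = 1.0) -> bool:
        # Refill for the elapsed time, never exceeding the burst capacity.
        if now > self.last_refill:
            elapsed = now - self.last_refill
            self.tokens = min(self.burst_capacity,
                              self.tokens + elapsed * self.fill_rate)
            self.last_refill = now
        # Spend tokens if enough are available; otherwise reject.
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class LabelRateLimiter:
    """Hypothetical limiter holding one bucket per label value (e.g. a user ID)."""

    def __init__(self, burst_capacity: float, fill_rate: float) -> None:
        self.burst_capacity = burst_capacity
        self.fill_rate = fill_rate
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, label_value: str, now: float) -> bool:
        bucket = self.buckets.get(label_value)
        if bucket is None:
            # New label values start with a full bucket.
            bucket = TokenBucket(self.burst_capacity, self.fill_rate,
                                 tokens=self.burst_capacity, last_refill=now)
            self.buckets[label_value] = bucket
        return bucket.try_acquire(now)


limiter = LabelRateLimiter(burst_capacity=2, fill_rate=1.0)
burst = [limiter.allow("user-1", now=0.0) for _ in range(3)]
after_refill = limiter.allow("user-1", now=1.0)
other_user = limiter.allow("user-2", now=0.0)
```

Here `user-1` can send two requests at once (the burst capacity), is rejected on the third, and is admitted again one second later once the fill rate has restored a token. `user-2` has its own bucket and is not affected.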

✨ Get started

📖 Learn

The Concepts section explains the essential elements of Aperture's system and policies and describes their key components.

Additional Support

Reach out to us with any questions or requests for clarification. Our team is here to help and to make sure your experience with Aperture is smooth.

💬 Consult with an expert | 👥 Join our Slack Community | ✉️ Email: support@fluxninja.com