PostgreSQL

Overview

The PostgreSQL primary server process forks a new process every time a client requests a connection. PostgreSQL sets a connection limit, which determines the maximum number of connections that can be opened to the backend at any point of time.

In scenarios where the number of connections exceeds the limit, the PostgreSQL server will reject the connection request along with a relevant error on the client side. Such scenarios can indicate that client applications are sending long-running or heavy queries to the database and constantly creating new connections instead of reusing existing connections, which can lead to an overload scenario.

This policy is designed to detect overload in PostgreSQL database based on real-time percentage of active connections against the maximum number of connections.

Configuration

This policy is built using the PostgreSQL blueprint.

The below values.yaml file can be generated by following the steps in the

Installation section.

- aperturectl values.yaml

# yaml-language-server: $schema=../../../../../../blueprints/load-scheduling/postgresql/gen/definitions.json

blueprint: load-scheduling/postgresql

uri: ../../../../../../../blueprints

policy:

policy_name: postgres-connections

resources:

flow_control:

classifiers:

- selectors:

- agent_group: default

service: service1-demo-app.demoapp.svc.cluster.local

control_point: ingress

rules:

user_type:

extractor:

from: request.http.headers.user-type

postgresql:

agent_group: default

endpoint: postgresql.postgresql.svc.cluster.local:5432

username: postgres

password: secretpassword

databases:

- "postgres"

tls:

insecure: true

connections_used_threshold: 40

load_scheduling_core:

aiad_load_scheduler:

alerter:

alert_name: AIAD Load Throttling Event

load_multiplier_linear_decrement: 0.2

load_multiplier_linear_increment: 0.05

load_scheduler:

workload_latency_based_tokens: true

selectors:

- agent_group: default

control_point: ingress

service: service1-demo-app.demoapp.svc.cluster.local

scheduler:

workloads:

- label_matcher:

match_labels:

user_type: "guest"

parameters:

priority: 50.0

name: "guest"

- label_matcher:

match_labels:

http.request.header.user_type: "subscriber"

parameters:

priority: 250.0

name: "subscriber"

dry_run: false

Generated Policy

Circuit Diagram for this policy.

Installation

Generate a values file specific to the policy. This can be achieved using the command provided below.

aperturectl blueprints values --name=load-scheduling/postgres --version=v2.34.0 --output-file=values.yaml

Apply the policy using the aperturectl CLI or kubectl.

- aperturectl (Aperture Cloud)

- aperturectl (self-hosted controller)

- kubectl (self-hosted controller)

aperturectl cloud blueprints apply --values-file=values.yaml

Pass the --kube flag with aperturectl to directly apply the generated policy

on a Kubernetes cluster in the namespace where the Aperture Controller is

installed.

aperturectl blueprints generate --values-file=values.yaml --output-dir=policy-gen

aperturectl apply policy --file=policy-gen/policies/postgres-connections.yaml --kube

Apply the generated policy YAML (Kubernetes Custom Resource) with kubectl.

aperturectl blueprints generate --values-file=values.yaml --output-dir=policy-gen

kubectl apply -f policy-gen/policies/postgres-connections-cr.yaml -n aperture-controller

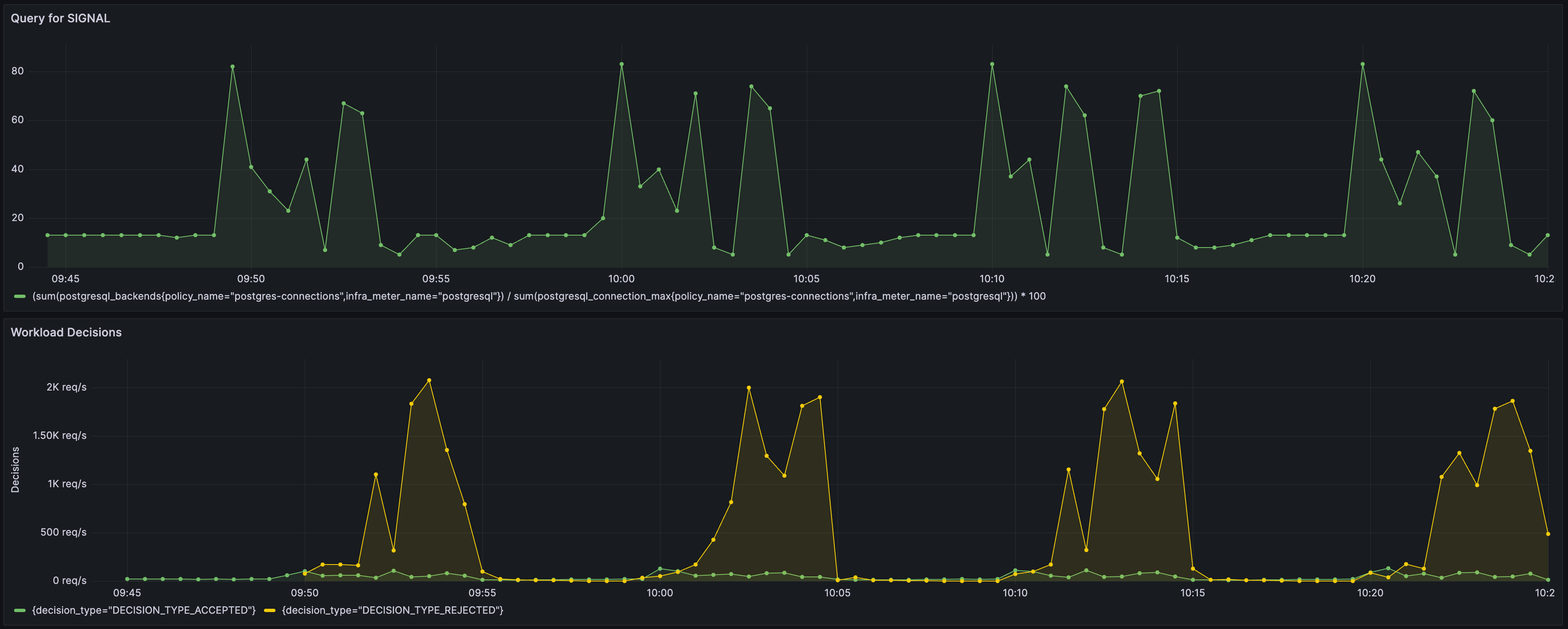

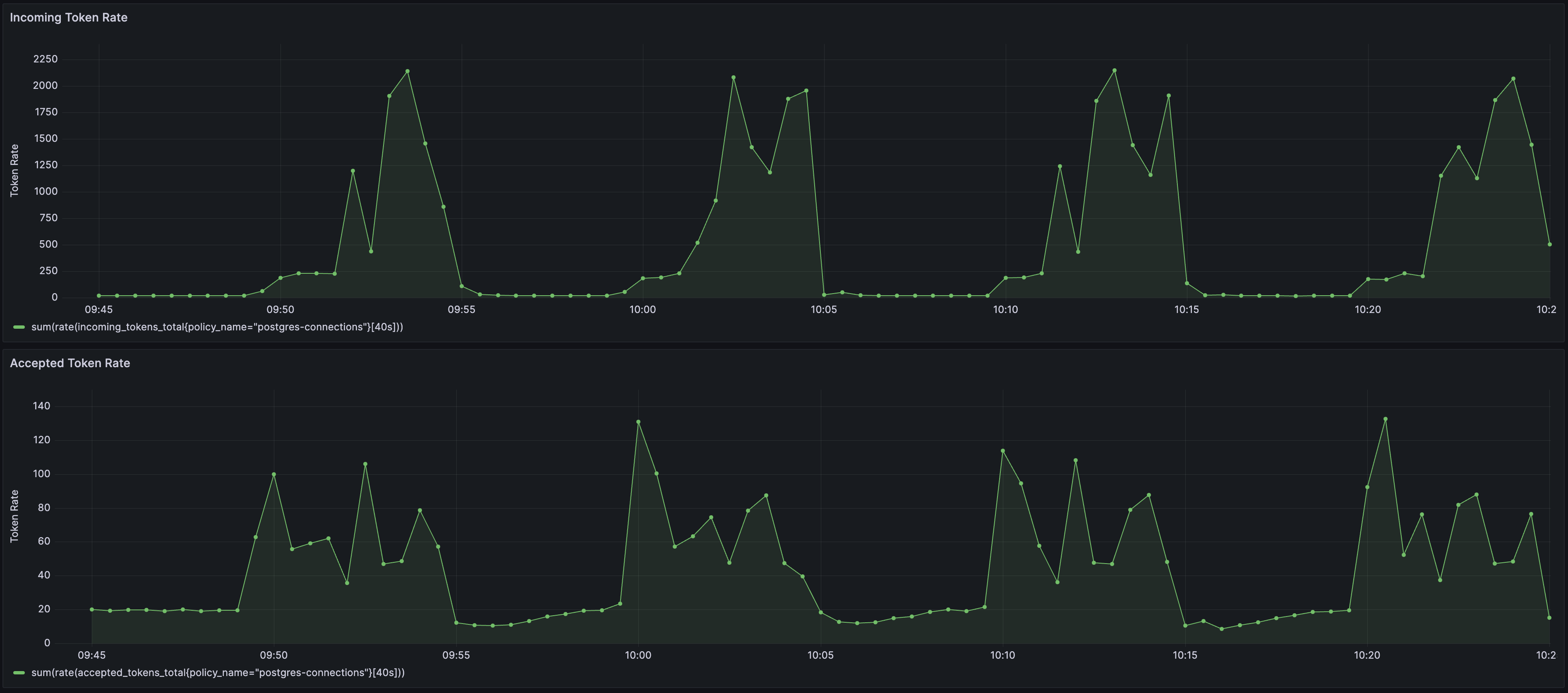

Policy in Action

To see the policy in action, the traffic is generated such that it starts within the PostgreSQL's max connection limit and then goes beyond the capacity after some time. Such a traffic pattern is repeated periodically.